Preface

Before diving in, it’s important to be clear about the impact.

This incident did not cause any data breaches, data loss, or security concerns for our clients or systems. The AI-generated site was intentionally used in a low-risk context - a standalone marketing landing page with no customer data, no authentication, and no connection to production systems.

This was a deliberate decision. We chose to experiment with AI in an environment where the blast radius was minimal and the consequences of failure were contained.

The issue was limited to unexpected redirects caused by a vulnerable third-party package. It was detected quickly, resolved promptly, and posed no risk beyond the landing page itself.

The lesson here isn’t fear - it’s intent, boundaries, and responsibility when choosing where and how AI is used.

We Got Hacked

We recently did what a lot of businesses are experimenting with right now - we used an AI generator to build a landing page.

It was quick.

It was simple.

It was easy to use.

And within minutes, we had a fit-for-purpose landing page that would normally have taken days to design, build, and deploy.

So far, so good.

But… we got hacked.

What happened

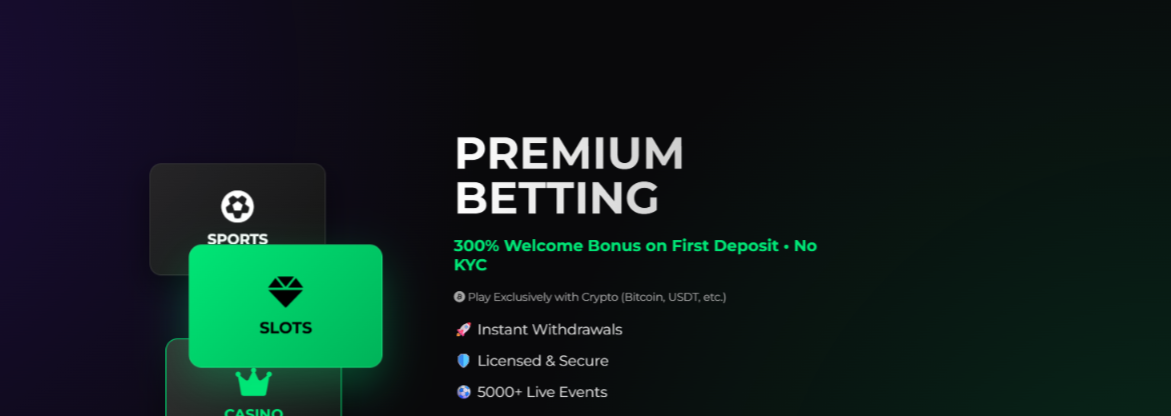

Yesterday, we noticed something odd. Any time we clicked a link on the site - any link - we were redirected to an offshore gambling page.

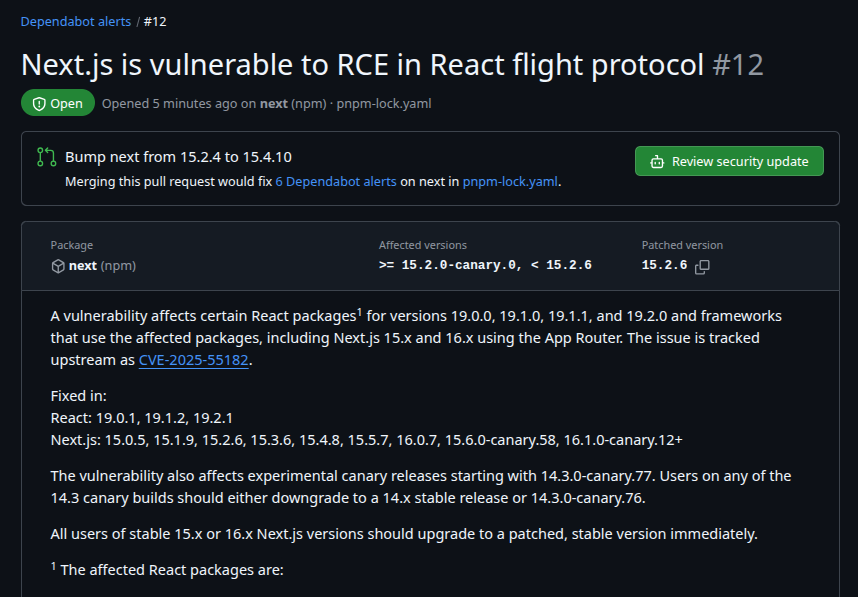

The site itself hadn’t changed visually. There were no obvious red flags. But under the hood, the site had been compromised through a third-party package with a known security flaw.

This wasn’t a targeted attack.

This was collateral damage.

Why it didn’t get worse

The anomaly was picked up within a few hours. We use this landing page every day, so the behaviour stood out immediately.

From there, we were able to:

- Identify what had changed

- Trace the issue back to its source

- Isolate the vulnerable package

- Patch the package and remove the redirect
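To make the "isolate the vulnerable package" step a little more concrete, here is a minimal sketch of the idea. It is an illustration under assumptions, not the tooling used in this incident: it assumes an npm-style lockfile with a `packages` section, and the package names, versions, and advisory list are all hypothetical.

```python
import json


def installed_packages(lockfile_text: str) -> dict[str, set[str]]:
    """Map each package name to every version found in the lockfile.

    Assumes the npm lockfile layout where keys under "packages" look like
    "node_modules/name" or "node_modules/a/node_modules/name".
    """
    lock = json.loads(lockfile_text)
    found: dict[str, set[str]] = {}
    for path, meta in lock.get("packages", {}).items():
        if not path:  # the empty key describes the project itself
            continue
        name = path.split("node_modules/")[-1]
        found.setdefault(name, set()).add(meta.get("version", "unknown"))
    return found


def flag_vulnerable(
    installed: dict[str, set[str]], advisories: dict[str, set[str]]
) -> list[tuple[str, str]]:
    """Return (name, version) pairs that appear in the advisory list."""
    hits = []
    for name, versions in installed.items():
        for version in versions & advisories.get(name, set()):
            hits.append((name, version))
    return sorted(hits)


# Hypothetical lockfile: one package is installed twice at different versions.
SAMPLE_LOCK = json.dumps({
    "packages": {
        "": {"name": "landing-page"},
        "node_modules/example-router": {"version": "1.2.3"},
        "node_modules/example-ui": {"version": "4.0.0"},
        "node_modules/example-ui/node_modules/example-router": {"version": "1.1.0"},
    }
})

# Hypothetical advisory data; in practice this would come from a real feed.
ADVISORIES = {"example-router": {"1.1.0"}}

flagged = flag_vulnerable(installed_packages(SAMPLE_LOCK), ADVISORIES)
print(flagged)
```

The nested copy of a package is the one that is easy to miss, which is why the sketch collects every version rather than only the top-level one.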

We had a fix ready quickly.

But here’s the important part:

That only happened because we knew what we were doing.

We had:

- The tools to inspect and audit the code

- The experience to recognise abnormal behaviour

- The ability to re-engineer the landing page

- The knowledge to isolate and remove the problem safely

And that led us to a much bigger question…

What if you don’t have that capability?

What if you’re a company using AI-generated tools or platforms and:

- You don’t know what dependencies are being pulled in

- You can’t easily inspect the generated code

- You don’t have anyone who can identify what “normal” looks like

- You don’t know how to respond when something goes wrong

In that scenario, how long would the issue have gone unnoticed?

Days?

Weeks?

Months?

And what would it have cost - in trust, reputation, SEO, or compliance - before anyone realised?

AI is powerful - but it’s not magic

I’m genuinely enjoying playing with AI tools. The speed. The creativity. The possibilities. The productivity gains are real, and they’re exciting.

But just because you can doesn’t mean you always should.

AI can generate code, websites, workflows, and systems - but it doesn’t remove responsibility. Someone still needs to understand, verify, maintain, and secure what’s being produced.

A word of caution

If you’re going to use AI tools to build production assets:

- Make sure you understand what’s being generated

- Know what dependencies you’re relying on

- Have monitoring in place

- And critically - have access to an expert when things go wrong

If you don’t have that capacity internally, at least ensure you have someone available who does.
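As a rough idea of what "monitoring" can mean for a simple landing page, the sketch below scans a page's HTML for links and scripts that point at hosts you don't expect. It is a sketch under assumptions: the function name, URLs, and allow-list are hypothetical, and it only inspects the HTML as served. A redirect injected at runtime by a script would need a browser-based check to catch.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class UrlCollector(HTMLParser):
    """Collect href values from <a> tags and src values from <script> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "a" and attr_map.get("href"):
            self.urls.append(attr_map["href"])
        elif tag == "script" and attr_map.get("src"):
            self.urls.append(attr_map["src"])


def unexpected_hosts(html: str, page_url: str, allowed_hosts: set[str]) -> list[str]:
    """Return the sorted hosts referenced by the page that are not allowed."""
    collector = UrlCollector()
    collector.feed(html)
    hosts = set()
    for raw in collector.urls:
        # Resolve relative URLs against the page address before comparing.
        host = urlparse(urljoin(page_url, raw)).hostname
        if host and host not in allowed_hosts:
            hosts.add(host)
    return sorted(hosts)


# Hypothetical page content and allow-list, for illustration only.
SAMPLE_HTML = (
    '<a href="/pricing">Pricing</a>'
    '<a href="mailto:hello@example.com">Contact</a>'
    '<a href="https://casino.invalid/win">Win</a>'
    '<script src="https://cdn.example.net/app.js"></script>'
)
ALLOWED = {"www.example.com", "cdn.example.net"}

suspicious = unexpected_hosts(SAMPLE_HTML, "https://www.example.com/", ALLOWED)
print(suspicious)
```

Run on a schedule against the live page, a check like this turns "we happened to notice" into an alert. It does not replace having someone who can act on that alert.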

Because AI can move fast - but so can the consequences when something breaks.

And we were lucky this time.